The Technical Paradigm Shift: AI Moves from Scale-Driven Development to Practical Implementation

Executive Summary

The artificial intelligence landscape is undergoing a fundamental technical transformation as we enter 2026. After years of pursuing ever-larger language models through brute-force scaling, the industry is pivoting toward pragmatic implementation strategies that prioritize efficiency, specialized deployment, and real-world integration. This shift represents a maturation of AI technology from research-driven experimentation to production-ready solutions across multiple sectors.

The End of the Scaling Era

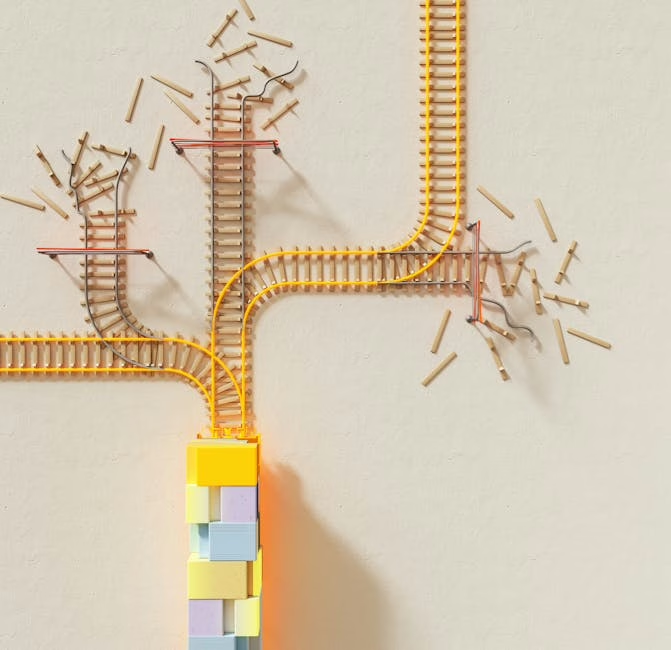

The paradigm that has dominated AI development since AlexNet’s breakthrough in 2012 is reaching its practical limits. Scaling neural networks through larger parameter counts and more compute, while technically impressive, has proven insufficient for widespread commercial deployment. The industry is now recognizing that architectural innovation, rather than pure scale, will drive the next wave of AI advancement.

This technical pivot involves several key methodological changes:

– Model Compression and Efficiency: Development of smaller, more efficient neural architectures that maintain performance while reducing computational overhead

– Domain-Specific Architectures: Custom neural network designs optimized for particular applications rather than general-purpose models

– Edge Computing Integration: Deployment of inference-optimized models on resource-constrained devices
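To make the model-compression item concrete, here is a minimal sketch of symmetric 8-bit post-training quantization, one common compression technique chosen here for illustration (the article does not name a specific method). The function names `quantize_int8` and `dequantize` and the sample weights are assumptions, not part of any particular library.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in quantized]


# Hypothetical weights, for illustration only.
weights = [0.52, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most `scale / 2`. Production toolchains typically add per-channel scales and calibration data, which this sketch omits.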

Sector-Specific Technical Implementation

Pharmaceutical Industry: Regulatory-Compliant AI Systems

The pharmaceutical sector exemplifies the practical challenges of AI deployment in highly regulated environments. According to industry analysis, AI implementation in pharma has expanded beyond initial clinical development applications to encompass the entire drug lifecycle, including manufacturing process optimization, laboratory automation, and supply chain management.

The technical requirements in this sector are particularly stringent:

– Explainable AI Architectures: Models must provide interpretable decision pathways for regulatory compliance

– Validation Frameworks: Rigorous testing protocols that meet FDA and EMA standards

– Data Integrity Systems: Tamper-evident audit trails for data and model decisions, with blockchain-integrated tracking as one proposed approach
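The core property behind a tamper-evident audit trail can be sketched with a simple hash chain, in which each entry commits to the hash of the previous one. This is an illustrative, single-process simplification and not a distributed ledger or a validated compliance system; the record fields and the names `append_entry` and `verify` are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: Dict, prev_hash: str) -> str:
    """Hash the record together with the previous entry's hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: List[Dict], record: Dict) -> None:
    """Append a record, linking it to the current tail of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev_hash": prev_hash,
                "hash": _digest(record, prev_hash)})


def verify(log: List[Dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical audit events, for illustration only.
audit_log: List[Dict] = []
append_entry(audit_log, {"event": "batch_released", "batch": "A-001"})
append_entry(audit_log, {"event": "model_prediction", "batch": "A-001", "score": 0.93})
intact_before = verify(audit_log)

audit_log[0]["record"]["batch"] = "A-002"  # simulate after-the-fact tampering
intact_after = verify(audit_log)
```

Changing any earlier record invalidates its stored hash, so `verify` detects the edit. Real deployments would also need signed entries, access control, and secure storage of the chain head.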

The payoff for overcoming these technical hurdles is substantial: accelerated time-to-market for new drugs and reduced development costs through optimized clinical trial design and predictive modeling.

Hardware Infrastructure: The Foundation Layer

The continued growth in AI-adjacent semiconductor stocks reflects the critical importance of specialized hardware in enabling practical AI deployment. The technical demands of modern AI workloads require purpose-built silicon architectures:

– Tensor Processing Units (TPUs): Google’s custom ASICs optimized for matrix operations

– Neural Processing Units (NPUs): Dedicated inference engines for edge devices

– Memory-Centric Architectures: Processing-in-memory solutions that reduce data movement overhead

This hardware evolution is essential for supporting the shift from cloud-centric deployment toward distributed edge inference, enabling real-time AI applications across industries.

Architectural Innovation Over Brute Force

The technical community is increasingly focused on novel neural network architectures that achieve better performance-per-parameter ratios. Key research directions include:

Mixture of Experts (MoE) Models

These architectures route each input to a small subset of expert sub-networks, so compute per token depends on the number of active experts rather than the total parameter count; all experts must still be held in memory. Recent advances in routing algorithms and expert specialization have made MoE models practical for production deployment.
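A toy version of top-k expert routing shows the idea. This is a sketch under simplifying assumptions: the experts are plain scalar functions, and the gate logits are passed in directly rather than produced by a learned router; `top_k_route` and `moe_forward` are illustrative names.

```python
import math
from typing import Callable, Dict, List, Sequence


def top_k_route(gate_logits: Sequence[float], k: int) -> Dict[int, float]:
    """Select the k experts with the largest logits and softmax over just those."""
    ranked = sorted(range(len(gate_logits)),
                    key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(gate_logits[i] for i in chosen)  # subtract max for numerical stability
    exps = {i: math.exp(gate_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x: float,
                gate_logits: Sequence[float],
                experts: List[Callable[[float], float]],
                k: int = 2) -> float:
    """Evaluate only the selected experts and mix their outputs by gate weight."""
    weights = top_k_route(gate_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Four hypothetical experts; only two are evaluated per input.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
gate_logits = [0.1, 2.0, -1.0, 2.0]

routing = top_k_route(gate_logits, k=2)
output = moe_forward(3.0, gate_logits, experts, k=2)
```

With `k=2`, half of the experts are never called for this input, which is where the compute saving comes from. Real MoE layers add load-balancing terms so that tokens do not all collapse onto the same few experts.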

Transformer Alternatives

Researchers are exploring alternatives to self-attention, whose cost grows as O(n²) in sequence length n, that scale linearly or near-linearly instead. State Space Models (SSMs), including the Mamba family, show promise for long-sequence processing tasks.
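The difference in scaling can be seen in a minimal linear recurrence of the kind that underlies state space models. This is a deliberately simplified, scalar, time-invariant sketch (`h_t = a*h_{t-1} + b*x_t`, `y_t = c*h_t`), not an implementation of Mamba, which makes its parameters input-dependent; the parameter values are arbitrary assumptions.

```python
from typing import List, Sequence


def ssm_scan(xs: Sequence[float], a: float = 0.9,
             b: float = 1.0, c: float = 1.0) -> List[float]:
    """Run a scalar linear state-space recurrence in one pass over the sequence.

    Each step touches only the fixed-size state h, so the total work
    is O(n) in the sequence length n.
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x   # state update carries a summary of the past
        ys.append(c * h)    # readout from the current state
    return ys


def attention_pair_count(n: int) -> int:
    """Number of query-key score computations in full self-attention."""
    return n * n


impulse_response = ssm_scan([1.0, 0.0, 0.0], a=0.5)
pairs_at_1k = attention_pair_count(1_000)
```

For a sequence of 1,000 tokens the recurrence takes 1,000 state updates, while full self-attention computes 1,000,000 pairwise scores. The trade-off is that the recurrence must compress all history into a fixed-size state.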

Multimodal Integration

Advanced architectures that natively process multiple data modalities (text, image, audio, sensor data) without requiring separate encoding pipelines are becoming crucial for practical applications.

Human-AI Workflow Integration

The technical focus is shifting toward designing AI systems that augment rather than replace human capabilities. This requires sophisticated interface architectures that:

– Maintain Human Agency: Systems that provide recommendations while preserving human decision-making authority

– Contextual Adaptation: Models that adjust their behavior based on user expertise and task complexity

– Feedback Integration: Architectures that continuously learn from human corrections and preferences
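A small sketch illustrates how these three properties might fit together: the model only recommends, low-confidence cases are flagged for closer review, and human overrides are recorded as feedback. The class names, the 0.8 threshold, and the labels are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Recommendation:
    label: str         # what the model suggests
    confidence: float  # model's confidence in [0, 1]


@dataclass
class ReviewQueue:
    review_threshold: float = 0.8
    # (model_label, human_label) pairs, kept as feedback for later retraining.
    corrections: List[Tuple[str, str]] = field(default_factory=list)

    def needs_review(self, rec: Recommendation) -> bool:
        """Flag low-confidence recommendations for closer human attention."""
        return rec.confidence < self.review_threshold

    def finalize(self, rec: Recommendation, human_label: str) -> str:
        """The human decision is always final; disagreements are recorded."""
        if human_label != rec.label:
            self.corrections.append((rec.label, human_label))
        return human_label


queue = ReviewQueue()
rec = Recommendation(label="approve", confidence=0.65)

flagged = queue.needs_review(rec)
final = queue.finalize(rec, human_label="reject")
```

Requiring a human label on every call to `finalize` keeps decision authority with the reviewer by construction, while the `corrections` list gives a later training step something to learn from.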

Performance Metrics Evolution

The industry is moving beyond traditional benchmarks toward metrics that reflect real-world utility:

– Task-Specific Accuracy: Performance on domain-relevant problems rather than general benchmarks

– Latency and Throughput: Real-time performance characteristics for production environments

– Resource Efficiency: Performance per watt and performance per dollar metrics

– Reliability Measures: Consistency and failure mode analysis for mission-critical applications
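Latency and throughput, for example, can be measured with a few lines of standard-library code. The sketch below assumes a single-threaded, synchronous call and uses the nearest-rank percentile definition; `benchmark`, `percentile`, and the stand-in workload are illustrative names, not a standard benchmarking API.

```python
import math
import time
from typing import Callable, Dict, Iterable, List


def percentile(sorted_values: List[float], p: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    if not sorted_values:
        raise ValueError("percentile of empty list")
    rank = max(1, math.ceil(p / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def benchmark(fn: Callable, inputs: Iterable) -> Dict[str, float]:
    """Time each call to fn and summarize tail latency and throughput."""
    latencies: List[float] = []
    start = time.perf_counter()
    for item in inputs:
        t0 = time.perf_counter()
        fn(item)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    latencies.sort()
    return {
        "p50_s": percentile(latencies, 50),
        "p99_s": percentile(latencies, 99),
        "throughput_per_s": len(latencies) / elapsed if elapsed > 0 else float("inf"),
    }


# Stand-in workload; a real benchmark would call the model's inference function.
stats = benchmark(lambda n: sum(range(n)), [1000] * 200)
```

Reporting p99 alongside the median matters in production because a service that is fast on average can still miss its latency budget on the slowest requests.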

Future Technical Trajectories

As we progress through 2026, several technical trends will shape AI development:

1. Federated Learning at Scale: Distributed training protocols that enable model improvement while preserving data privacy

2. Neuromorphic Computing: Brain-inspired hardware architectures that promise dramatic efficiency improvements

3. Quantum-Classical Hybrid Systems: Integration of quantum computing elements for specific optimization tasks

4. Automated Neural Architecture Search: AI-designed neural networks optimized for specific deployment constraints
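As an illustration of the first trend, the aggregation step at the heart of federated averaging can be sketched in a few lines: each client trains locally and sends only its model weights, and the server combines them weighted by local sample count. This is a minimal sketch of one aggregation round under simplifying assumptions (flat weight vectors, no secure aggregation or differential privacy); the function name and sample values are hypothetical.

```python
from typing import List, Tuple


def federated_average(client_updates: List[Tuple[List[float], int]]) -> List[float]:
    """Average client weight vectors, weighting each by its local sample count.

    client_updates holds (weights, num_samples) pairs. Raw training data
    never leaves the clients; only the weights are shared.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send vectors of the same length")
        for j, w in enumerate(weights):
            averaged[j] += w * n / total_samples
    return averaged


# Two hypothetical clients: the second has three times as much local data.
global_weights = federated_average([
    ([1.0, 2.0], 1),
    ([3.0, 4.0], 3),
])
```

Clients with more data pull the global model further toward their local solution. Sharing weights rather than data reduces exposure but does not by itself guarantee privacy, which is why additional protections are usually layered on top.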

Conclusion

The transition from hype-driven scaling to pragmatic implementation represents a natural evolution in AI technology maturation. This shift demands more sophisticated engineering approaches but promises more sustainable and broadly applicable AI solutions. The technical challenges are significant, but the potential for transformative impact across industries makes this one of the most exciting periods in AI development.

The success of this paradigm shift will ultimately be measured not by the size of our models, but by their practical utility in solving real-world problems efficiently and reliably.