The Technical Evolution of AGI: From Scaling Laws to Recursive Intelligence and Practical Implementation

As we approach 2026, the artificial general intelligence (AGI) research landscape is undergoing a fundamental transformation. The field is pivoting from the brute-force scaling paradigm that dominated the past decade toward more sophisticated architectural innovations and practical deployment strategies. This shift represents a maturation of AI research, moving beyond raw computational power to address the complex technical challenges of creating truly intelligent systems.

The End of the Scaling Era

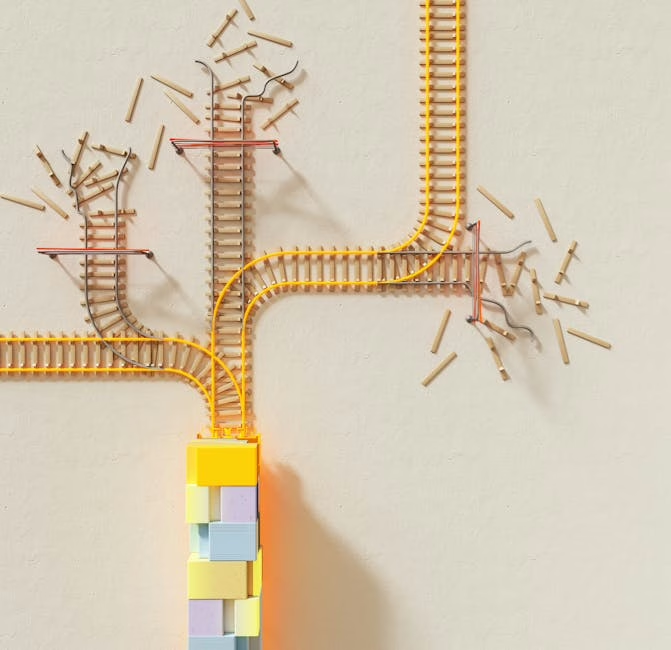

The traditional approach to advancing AI capabilities, simply building larger language models with more parameters and training data, is reaching its practical limits. Alex Krizhevsky’s 2012 breakthrough with AlexNet showed that larger networks trained on more data with more compute can yield remarkable results, but the current research trajectory suggests that continued scaling alone will not bridge the gap to AGI. The computational and economic costs of training ever-larger models are becoming prohibitive, while each additional increase in scale buys a smaller performance gain.

This technical reality is driving researchers to explore fundamentally different approaches to intelligence. Rather than relying on parameter count as a proxy for capability, the focus is shifting toward architectural innovations that can more efficiently process information and solve complex, long-horizon problems.

Recursive Language Models: A Paradigm Shift in AI Architecture

One of the most significant technical breakthroughs emerging from this transition is Prime Intellect’s development of Recursive Language Models (RLMs). This architecture departs from traditional transformer-based approaches by implementing a general inference strategy that enables AI systems to manage their own context dynamically.

The technical significance of RLMs lies in their ability to handle long-horizon tasks through recursive processing. Unlike conventional language models that process input sequentially with fixed context windows, RLMs can recursively refine their understanding and maintain relevant information across extended problem-solving sessions. This architectural innovation addresses one of the fundamental limitations of current AI systems: the inability to maintain coherent reasoning across complex, multi-step problems.

The recursive approach allows the model to treat long prompts not as static input but as dynamic problem spaces that can be iteratively refined and explored. This represents a crucial step toward AGI, as it enables the kind of persistent, goal-directed reasoning that characterizes human intelligence.
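The recursive idea can be illustrated with a small sketch. This is not Prime Intellect's implementation; it is a generic divide-and-combine loop under stated assumptions. The names `recursive_answer` and `toy_llm`, the `max_chars` budget, and the prompt layout are all hypothetical, and `toy_llm` is a keyword-matching stand-in for a real model call.

```python
from typing import Callable, List


def recursive_answer(
    query: str,
    chunks: List[str],
    llm: Callable[[str], str],
    max_chars: int = 2000,
) -> str:
    """Answer `query` over `chunks`, recursing when they exceed the budget."""
    text = "\n".join(chunks)
    # Base case: the context fits the budget (or cannot be split further).
    if len(text) <= max_chars or len(chunks) <= 1:
        return llm(f"Context:\n{text}\n\nQuestion: {query}")

    # Recursive case: solve each half of the context independently.
    mid = len(chunks) // 2
    left = recursive_answer(query, chunks[:mid], llm, max_chars)
    right = recursive_answer(query, chunks[mid:], llm, max_chars)

    # Combine step: one more call over the two partial answers.
    # Assumption: partial answers are short enough to fit in a single call.
    return llm(f"Context:\n{left}\n{right}\n\nQuestion: {query}")


def toy_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model: returns context lines containing 'secret'."""
    context = prompt.split("Context:\n", 1)[1].rsplit("\n\nQuestion:", 1)[0]
    return "\n".join(line for line in context.split("\n") if "secret" in line)


if __name__ == "__main__":
    docs = ["filler"] * 500 + ["secret=42"] + ["filler"] * 500
    print(recursive_answer("What is the secret?", docs, toy_llm, max_chars=200))
```

Splitting on chunk boundaries rather than raw character offsets keeps each piece intact, and halving the list guarantees termination. A real system would let the model itself decide how to split and what to keep, which is the part this sketch leaves out.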

Enterprise-Focused Research Trends

While breakthrough architectures like RLMs capture attention, parallel research streams are addressing the practical challenges of deploying AI in enterprise environments. Four key technical trends are emerging as critical for 2026:

Continual Learning Systems

Continual learning addresses the catastrophic forgetting problem that plagues current AI systems. Traditional neural networks, when trained on new data, tend to overwrite previously learned information. Advanced continual learning architectures employ techniques such as elastic weight consolidation, progressive neural networks, and meta-learning approaches to maintain knowledge while acquiring new capabilities.

The technical implementation involves mechanisms that selectively preserve important weights while adapting to new domains. Elastic weight consolidation, for example, penalizes changes to weights that mattered for earlier tasks, with importance estimated from the Fisher information. This capability is essential for AGI systems that must continuously learn and adapt without losing core competencies.
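To make elastic weight consolidation concrete, here is a minimal sketch of its regularization term in plain Python. It assumes the per-parameter Fisher information values have already been estimated on the old task; the function names and the flat-list parameter layout are illustrative choices, not taken from any particular library.

```python
from typing import List


def ewc_penalty(
    params: List[float],
    anchor_params: List[float],
    fisher: List[float],
    lam: float,
) -> float:
    """Quadratic EWC penalty: (lam / 2) * sum_i F_i * (theta_i - theta_star_i)^2."""
    return 0.5 * lam * sum(
        f * (p - a) ** 2 for p, a, f in zip(params, anchor_params, fisher)
    )


def ewc_penalty_grad(
    params: List[float],
    anchor_params: List[float],
    fisher: List[float],
    lam: float,
) -> List[float]:
    """Gradient of the penalty: lam * F_i * (theta_i - theta_star_i) per parameter."""
    return [lam * f * (p - a) for p, a, f in zip(params, anchor_params, fisher)]


def ewc_loss(
    new_task_loss: float,
    params: List[float],
    anchor_params: List[float],
    fisher: List[float],
    lam: float = 1.0,
) -> float:
    """Total objective: loss on the new task plus the penalty protecting the old one."""
    return new_task_loss + ewc_penalty(params, anchor_params, fisher, lam)
```

Parameters with a large Fisher value are pulled strongly back toward their old-task values, while parameters with a Fisher value near zero are free to move. The weight `lam` sets the trade-off between retaining old knowledge and fitting the new task.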

Efficient Model Architectures

The trend toward smaller, more efficient models is driving innovations in model compression, knowledge distillation, and neural architecture search. Techniques such as pruning, quantization, and low-rank factorization are enabling the deployment of capable models on edge devices and in resource-constrained environments.
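Two of the techniques named above, pruning and quantization, can be sketched in a few lines. The example below assumes unstructured magnitude pruning and symmetric per-tensor int8 quantization, which are common simple variants; the function names are illustrative, and production toolkits work on tensors rather than Python lists.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices sorted by absolute value; the first k are dropped.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127] with one scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]
```

Pruning reduces the number of nonzero weights, while quantization reduces the bits stored per weight. The rounding error of this quantizer is at most half of `scale` per weight, so tensors with a few large outliers lose more precision on their small values.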

These architectural optimizations are particularly important for AGI development, as true general intelligence must be deployable across diverse computational environments, from mobile devices to embedded systems.

Multi-Modal Integration

Advanced AGI systems require the ability to process and integrate information across multiple modalities—text, images, audio, and sensor data. Current research is focusing on unified transformer architectures that can handle heterogeneous input types through shared representation spaces and cross-modal attention mechanisms.

The technical challenge lies in developing architectures that can learn meaningful correspondences between different data types while maintaining computational efficiency. Recent advances in vision-language models and audio-visual processing provide a foundation for more comprehensive multi-modal AGI systems.
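As a rough illustration of cross-modal attention, the sketch below lets vectors from one modality (say, text tokens) attend over vectors from another (say, image patches) using standard scaled dot-product attention. It assumes both modalities have already been projected into a shared space of the same dimension; real models add learned query, key, and value projections and multiple heads, which are omitted here.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(
    queries: List[Vector], keys: List[Vector], values: List[Vector]
) -> List[Vector]:
    """Each query (modality A) returns a weighted mix of values (modality B)."""
    d = len(keys[0])
    out_dim = len(values[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(out_dim)]
        )
    return outputs
```

The cost grows with the number of queries times the number of keys, which is one reason the efficiency concern raised above matters once images or audio contribute thousands of tokens.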

Interpretable AI Systems

As AI systems become more capable, the need for interpretability becomes critical for enterprise deployment. Technical approaches include attention visualization, gradient-based explanation methods, and causal inference techniques that can provide insights into model decision-making processes.
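A gradient-based explanation can be shown on a model small enough to differentiate by hand. The sketch below assumes a logistic-regression classifier and computes gradient-times-input attributions, one simple member of this family of methods; the function names are illustrative, and deep networks would obtain the gradient through automatic differentiation instead.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: List[float], bias: float, x: List[float]) -> float:
    """Probability of the positive class under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def gradient_x_input(weights: List[float], bias: float, x: List[float]) -> List[float]:
    """Attribution per feature: (d prediction / d x_i) * x_i."""
    p = predict(weights, bias, x)
    # For a sigmoid output, d p / d x_i = p * (1 - p) * w_i.
    grads = [p * (1.0 - p) * w for w in weights]
    return [g * xi for g, xi in zip(grads, x)]
```

A feature with zero weight receives zero attribution however large its value, and the sign of each attribution shows whether the feature pushed the prediction up or down. These scores describe the model's local sensitivity around one input, not a causal account of its behavior.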

For AGI systems, interpretability is not just a deployment requirement but a fundamental architectural consideration. Systems that can explain their reasoning processes are more likely to achieve the kind of transparent, verifiable intelligence that characterizes human cognition.

The Path Forward: Integration and Synthesis

The convergence of these technical trends suggests that the path to AGI will involve the synthesis of multiple architectural innovations rather than a single breakthrough. Recursive processing capabilities must be combined with continual learning systems, efficient architectures, multi-modal integration, and interpretability mechanisms to create truly general intelligent systems.

The technical challenges are substantial. Researchers must develop training methodologies that can effectively optimize these complex, multi-component architectures. New evaluation metrics and benchmarks are needed to assess performance across the diverse capabilities required for general intelligence.

Moreover, the transition from research prototypes to practical implementations requires addressing engineering challenges around scalability, reliability, and deployment in production environments. The gap between laboratory demonstrations and real-world applications remains significant.

Implications for AGI Development

These technical developments collectively point toward a more pragmatic approach to AGI research. Rather than pursuing intelligence through sheer scale, the field is embracing architectural sophistication and practical deployment considerations. This shift suggests that AGI may emerge not from a single monolithic system but from the integration of specialized, efficient components that can work together to solve complex problems.

The recursive processing capabilities demonstrated by RLMs, combined with continual learning systems and efficient multi-modal architectures, provide a technical foundation for systems that can approach human-level general intelligence. However, significant research challenges remain in areas such as common-sense reasoning, causal understanding, and creative problem-solving.

As we move toward 2026, the AGI research community is positioning itself for a more sustainable and technically grounded approach to artificial general intelligence. The focus on practical implementation, architectural innovation, and systematic integration of capabilities represents a maturation of the field that may ultimately prove more effective than the scaling-focused approaches of the past decade.