AI Semiconductor Market Surge: Technical Infrastructure Driving 2026’s Investment Rally

Market Momentum Reflects Technical Advancement

The artificial intelligence semiconductor sector has entered 2026 with remarkable momentum, marking the fourth consecutive year of substantial gains as investors recognize the critical role of specialized hardware in advancing AI capabilities. This sustained growth pattern indicates a fundamental shift in how the market values the technical infrastructure underlying modern AI systems.

The rally reflects deeper technical realities: as neural network architectures grow more complex and computationally demanding, the need for specialized silicon has intensified. General-purpose processors built on the traditional von Neumann model, in which compute and memory are separate and data movement becomes the bottleneck, struggle with the massively parallel workloads of transformer models and graph neural networks, and they are a poor fit for emerging neuromorphic computing paradigms.

Strategic Market Positioning: Kunlunxin’s IPO Architecture

Baidu’s decision to spin off Kunlunxin as an independent entity and pursue a Hong Kong Stock Exchange listing is a significant strategic development in the AI chip landscape. The move comes at a critical juncture, as China’s domestic AI infrastructure requirements are expanding rapidly.

Kunlunxin’s technical approach centers on domain-specific architectures optimized for deep learning workloads. Its chip designs incorporate specialized tensor processing units and memory hierarchies engineered for the matrix multiplication operations that dominate neural network inference and training. The company’s focus on heterogeneous computing architectures, which combine traditional CPU cores with AI-accelerated processing units, addresses the diverse computational requirements of modern AI applications.
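To make the point about matrix multiplication concrete, the following is a minimal sketch that counts floating-point operations for a single dense layer. The layer sizes are hypothetical and chosen only for illustration; they do not describe any Kunlunxin product.

```python
def dense_layer_flops(batch: int, d_in: int, d_out: int) -> dict:
    """Count floating-point operations for one dense layer: y = relu(x @ W + b)."""
    # Each of the batch * d_out outputs is conventionally counted as
    # d_in multiply-adds, i.e. 2 * d_in FLOPs.
    matmul = 2 * batch * d_in * d_out
    # One add per output element for the bias, one comparison for ReLU.
    elementwise = 2 * batch * d_out
    return {
        "matmul": matmul,
        "elementwise": elementwise,
        "matmul_share": matmul / (matmul + elementwise),
    }


# Hypothetical layer sizes, not taken from any real chip or model.
stats = dense_layer_flops(batch=32, d_in=4096, d_out=4096)
print(f"matmul share of FLOPs: {stats['matmul_share']:.4%}")
```

Under this simple counting, the matrix multiplication share equals d_in / (d_in + 1), so it approaches 100% as layers get wider. That is one reason accelerator designs devote most of their silicon to multiply-accumulate arrays and the memory paths that feed them.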

The timing of this IPO reflects the maturation of China’s AI semiconductor ecosystem. As regulatory frameworks around AI development continue to evolve globally, having domestically controlled AI infrastructure becomes increasingly strategic from both technical sovereignty and performance optimization perspectives.

Technical Innovation Driving Alphabet’s Market Performance

Google’s exceptional 2025 performance, its best on Wall Street since 2009, shows how technical innovation in AI can translate into market confidence. The company responded to competitive pressure by deploying new methods across multiple AI domains.

Key technical developments that drove investor enthusiasm include advances in large language model efficiency, breakthrough work in multimodal AI architectures, and significant improvements in neural architecture search (NAS) methodologies. Google’s technical teams have made substantial progress in model compression techniques, enabling more efficient deployment of sophisticated AI capabilities across edge devices and cloud infrastructure.

The company’s work on sparse neural networks and attention mechanism optimizations has yielded measurable improvements in both computational efficiency and model performance. These technical advances address critical scalability challenges that have historically limited the practical deployment of state-of-the-art AI systems.

Underlying Technical Drivers

The sustained growth in AI-adjacent semiconductor investments reflects several fundamental technical trends reshaping the industry:

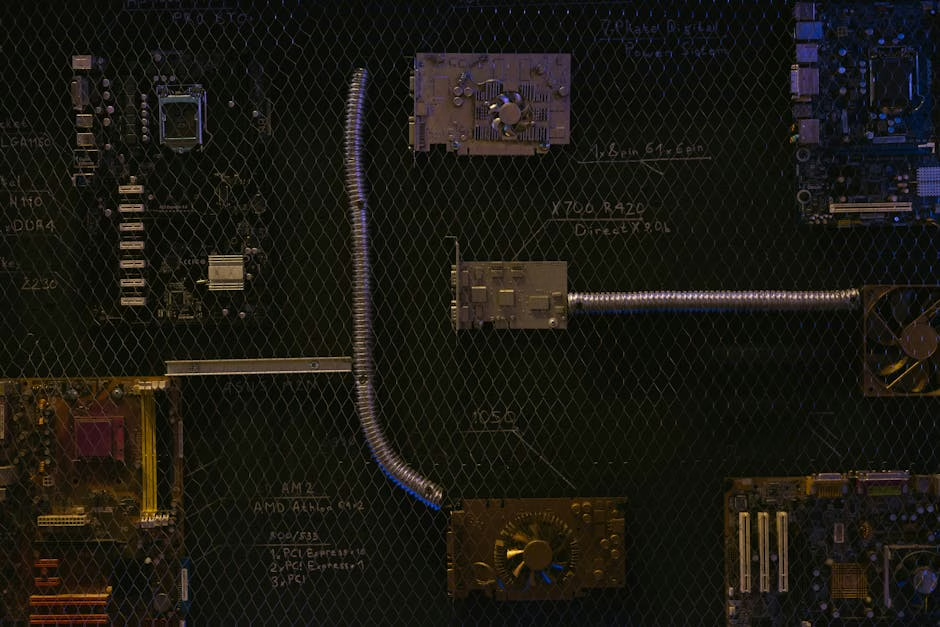

Architectural Specialization: The transition from general-purpose computing to domain-specific architectures optimized for AI workloads continues accelerating. Modern AI chips incorporate specialized memory systems, custom interconnects, and processing units designed specifically for tensor operations.

Scale and Efficiency Convergence: As model sizes continue growing—with some language models exceeding trillion-parameter thresholds—the technical requirements for training and inference infrastructure have intensified. This drives demand for more sophisticated semiconductor solutions that can handle massive parallel processing while maintaining energy efficiency.

Edge AI Integration: The proliferation of AI capabilities in edge devices requires new chip architectures that balance computational power with strict power and thermal constraints. This technical challenge is driving innovation in neuromorphic computing, quantization techniques, and novel memory architectures.
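The scale and quantization points above can be illustrated with some back-of-the-envelope arithmetic. The sketch below estimates the memory needed just to store the weights of a one-trillion-parameter model at several precisions, then shows a simple symmetric int8 quantization round trip. It ignores activations, optimizer state, and caches, and the weight values are made up for illustration.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Memory needed to store the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(v / scale) for v in values], scale


def dequantize(q_values, scale):
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in q_values]


# Weight storage for a one-trillion-parameter model at each precision.
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(10**12, dtype):,.0f} GB")

# Made-up weights, used only to show the round trip.
weights = [0.5, -1.27, 0.03, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, restored)
```

Halving the bytes per parameter halves the weight footprint, which is why lower-precision formats matter both for trillion-parameter models in the data center and for power-constrained edge devices. The cost is a rounding error of at most half a quantization step per weight in this scheme.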

Technical Implications for Future Development

The current market dynamics reflect a deeper technical reality: AI advancement is increasingly constrained by hardware capabilities rather than algorithmic innovation. As researchers push the boundaries of model architecture—exploring mixture-of-experts systems, retrieval-augmented generation, and multi-agent frameworks—the underlying computational infrastructure must evolve correspondingly.
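As one illustration of how these architectures interact with hardware, here is a minimal sketch of top-k routing in a mixture-of-experts layer. The experts are toy scalar functions standing in for feed-forward blocks, and the router scores are made up; real systems differ in many details.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, router_scores, k=2):
    """Weighted sum of the outputs of the selected experts only."""
    gates = route_top_k(router_scores, k)
    return sum(weight * experts[i](x) for i, weight in gates.items())


# Hypothetical toy experts and router logits for a single token.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
scores = [0.1, 2.0, 2.0, -1.0]
gates = route_top_k(scores, k=2)
output = moe_forward(3.0, experts, scores, k=2)
print(gates, output)
```

Only k experts run per token, so compute per token stays modest, but every expert's weights must still be stored and reachable. That shifts pressure from arithmetic throughput toward memory capacity and interconnect bandwidth.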

The semiconductor industry’s response involves developing more sophisticated memory hierarchies, implementing advanced packaging technologies for chiplet-based designs, and exploring novel computing paradigms like optical processing and quantum-classical hybrid systems.

These technical developments suggest that the current investment surge represents more than market speculation—it reflects fundamental infrastructure requirements for the next generation of AI capabilities. As neural architectures become more sophisticated and deployment scales continue expanding, the technical demands on semiconductor solutions will only intensify, supporting continued innovation and investment in this critical sector.