AI’s Technical Evolution: From Scaling Laws to Pragmatic Implementation Across Industries

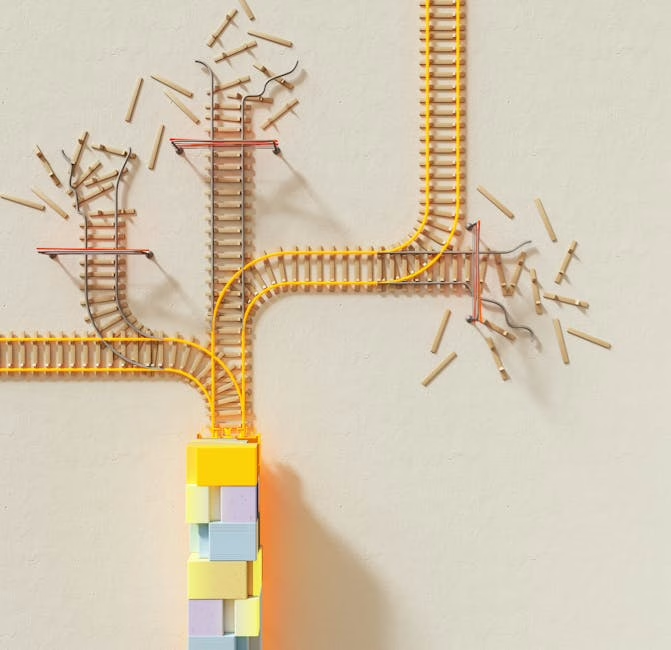

As 2026 begins, the artificial intelligence landscape is undergoing a fundamental shift: away from the brute-force scaling that defined the previous era and toward more targeted implementations across diverse industrial sectors.

The End of Pure Scaling Dominance

Many leading AI researchers now argue that pure scaling, meaning simply adding model parameters and training data, is reaching its practical limits. Empirical scaling laws describe loss falling as a power law in parameters, data, and compute rather than in proportion to them, so each further improvement costs multiplicatively more resources. This inflection point mirrors the semiconductor scaling challenges that have historically driven adaptations of Moore’s Law.
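Scaling laws are usually written as power laws, which is why returns diminish as models grow. The following is a minimal sketch of that behavior, assuming a loss of the form L(N) = (N_C / N) ** ALPHA. The constants `N_C` and `ALPHA` and the function names are illustrative assumptions, not values taken from this article.

```python
# Illustrative power-law loss curve: L(N) = (N_C / N) ** ALPHA.
# N_C and ALPHA are assumed constants, used only to show the shape of the curve.
N_C = 8.8e13
ALPHA = 0.076


def loss(n_params: float) -> float:
    """Loss predicted by the assumed power law for a model with n_params parameters."""
    return (N_C / n_params) ** ALPHA


def relative_gain(n_params: float, factor: float = 10.0) -> float:
    """Fractional loss reduction from multiplying the parameter count by `factor`."""
    return 1.0 - loss(n_params * factor) / loss(n_params)


if __name__ == "__main__":
    for n in (1e8, 1e9, 1e10, 1e11):
        absolute = loss(n) - loss(n * 10)
        print(f"{n:.0e} params: loss={loss(n):.3f}, "
              f"absolute gain from 10x={absolute:.3f}, "
              f"relative gain from 10x={relative_gain(n):.1%}")
```

Under these assumptions every tenfold increase in parameters buys the same relative loss reduction, so the absolute improvement per added parameter keeps shrinking while the cost of each step grows tenfold.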

The shift represents a move away from ever-larger dense transformer models toward designs that use parameters and compute more efficiently. Research teams are now optimizing for specific computational constraints rather than maximizing raw model size. Examples include mixture-of-experts (MoE) models, which activate only a subset of their parameters for each input, sparse attention mechanisms, and domain-specific neural architectures that aim for better performance per parameter.
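To make the MoE idea concrete, here is a minimal sketch of top-k expert routing in plain Python. The linear router, the toy experts, and all names (`moe_forward`, `gate_weights`) are hypothetical and chosen for illustration; production systems add load balancing and batching that are omitted here.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Route input vector x to the top-k experts and mix their outputs.

    gate_weights: one weight vector per expert (a hypothetical linear router).
    experts: list of callables, each mapping a vector to a vector of equal length.
    Returns the mixed output and the indices of the experts that were run.
    """
    # Router logits: dot product of x with each expert's gate vector.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate scores over the selected experts.
    probs = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)
        out = [o + p * y_j for o, y_j in zip(out, y)]
    return out, top


if __name__ == "__main__":
    # Four toy experts that simply scale their input by different factors.
    experts = [lambda v, s=s: [s * e for e in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    output, used = moe_forward([2.0, 1.0], gate_weights, experts, k=2)
    print("experts used:", used, "output:", output)
```

Only `k` of the experts run for a given input, which is how such a model can hold many parameters while spending compute on a fraction of them per token.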

Pharmaceutical AI: Regulatory-Constrained Innovation

The pharmaceutical sector exemplifies this pragmatic approach, where AI implementation must navigate stringent regulatory frameworks while delivering measurable clinical outcomes. According to industry analysis from Impiricus, pharmaceutical companies have expanded AI deployment beyond traditional drug discovery pipelines into manufacturing optimization, laboratory automation, and supply chain management.

The technical challenge in pharma AI lies in developing models that remain interpretable and auditable, requirements that conflict with the black-box nature of many deep learning architectures. This has driven innovation in explainable AI (XAI) methodologies, particularly gradient-based attribution methods and attention visualization techniques, which can help provide the transparency that regulators such as the FDA expect.
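As one illustration of gradient-based attribution, the sketch below computes gradient-times-input scores for a small logistic model, where the gradient has a closed form. The model, its weights, and the function names are assumptions made for the example; deep networks would obtain the gradient through automatic differentiation instead.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability output of a logistic model (a stand-in for a real classifier)."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def gradient_x_input(weights, bias, x):
    """Gradient-times-input attribution for the logistic model above.

    For p = sigmoid(w . x + b), the gradient is dp/dx_i = p * (1 - p) * w_i.
    Each feature's score is that gradient multiplied by the feature value.
    """
    p = predict(weights, bias, x)
    grads = [p * (1.0 - p) * w for w in weights]
    return [g * xi for g, xi in zip(grads, x)]


if __name__ == "__main__":
    # Hypothetical weights and input, for illustration only.
    weights, bias = [2.0, -1.0, 0.0], 0.1
    x = [1.0, 3.0, 5.0]
    for i, score in enumerate(gradient_x_input(weights, bias, x)):
        print(f"feature {i}: attribution {score:+.4f}")
```

Positive scores mark features that push the prediction up and negative scores mark those that push it down. Logging such scores next to each prediction is one possible way to build an audit trail.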

Manufacturing applications leverage computer vision models for quality control, while supply chain optimization employs reinforcement learning algorithms trained on historical distribution data. These implementations demonstrate how domain-specific constraints drive architectural innovation rather than generic model scaling.

Hardware Infrastructure and Market Dynamics

The semiconductor sector’s continued momentum reflects the underlying computational demands of this AI transition. While the focus shifts from training massive models to deploying efficient inference systems, the hardware requirements evolve accordingly. Edge computing architectures require specialized neural processing units (NPUs) optimized for low-power, real-time inference rather than the high-throughput training accelerators that dominated the previous scaling era.

This technical evolution drives investment patterns in AI-adjacent semiconductor companies, as the market recognizes that specialized inference hardware will become increasingly critical for practical AI deployment. The shift toward heterogeneous computing architectures—combining CPUs, GPUs, and specialized AI accelerators—represents a fundamental change in how AI workloads are distributed across computing infrastructure.

Architectural Innovation Beyond Transformers

The research community is actively exploring post-transformer architectures that address a core limitation of current large language models: the compute and memory cost of self-attention grows quadratically with sequence length. State space models, such as Mamba and its variants, scale linearly with sequence length, which makes them better suited to the long-sequence processing tasks common in industrial applications.
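The difference in scaling can be seen in a minimal sketch of a state space recurrence. This is a plain scalar, time-invariant model with assumed parameters `a`, `b`, and `c`. It is not Mamba itself, which makes these parameters depend on the input and uses vector-valued states.

```python
def ssm_scan(xs, a, b, c):
    """Run a scalar linear state space model over a sequence.

        h_t = a * h_{t-1} + b * x_t
        y_t = c * h_t

    The loop touches each input once and carries a fixed-size state,
    so time grows linearly with len(xs).
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys


def attention_pair_count(seq_len):
    """Number of query-key scores that full self-attention computes."""
    return seq_len * seq_len


if __name__ == "__main__":
    print(ssm_scan([1.0, 0.0, 0.0], a=0.5, b=1.0, c=2.0))
    for n in (1_000, 10_000, 100_000):
        print(f"seq_len={n}: scan steps={n}, attention scores={attention_pair_count(n)}")
```

A tenfold longer sequence means ten times as many scan steps but one hundred times as many attention scores.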

Retrieval-augmented generation (RAG) architectures represent another pragmatic approach, combining smaller, more efficient language models with external knowledge bases. This hybrid methodology reduces computational requirements while maintaining access to current information—a critical capability for enterprise deployments where model retraining costs are prohibitive.
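The retrieval step of a RAG pipeline can be sketched with nothing more than bag-of-words cosine similarity. The documents, the prompt template, and the function names below are hypothetical. A real deployment would typically use learned embeddings and a vector index, and would pass the assembled prompt to a language model.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents,
                    key=lambda d: cosine(q, Counter(tokenize(d))),
                    reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Assemble a prompt that grounds the model in the retrieved passages."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")


if __name__ == "__main__":
    # Hypothetical knowledge base entries.
    docs = [
        "The warehouse in Lyon ships cold-chain products.",
        "Batch records must be retained for audit.",
        "The cafeteria opens at noon.",
    ]
    print(build_prompt("Which warehouse ships cold-chain products?", docs, k=1))
```

Updating the knowledge base changes what the model can answer without touching its weights, which is the property that makes this approach attractive when retraining is expensive.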

Integration Challenges and Human-AI Workflows

The technical challenge of 2026 centers on developing AI systems that integrate seamlessly into existing human workflows rather than replacing them entirely. This requires sophisticated interface design and context-aware computing that can adapt to varying user expertise levels and task requirements.

Multimodal architectures that process text, vision, and audio inputs simultaneously are becoming essential for these integrated systems. The technical complexity lies not just in handling multiple data types, but in developing unified representation spaces that enable coherent reasoning across modalities.

Performance Metrics Beyond Scale

As the field matures, evaluation metrics are shifting from pure accuracy measures to more nuanced assessments including energy efficiency, inference latency, and deployment robustness. These practical considerations drive architectural decisions toward models that optimize for real-world constraints rather than benchmark performance alone.
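Inference latency is one of these metrics that is straightforward to measure. The following is a minimal sketch of a latency harness that reports median and tail latency. The function name `measure_latency`, the warmup count, and the stand-in workload are assumptions; a real evaluation would call the deployed model on representative inputs.

```python
import math
import statistics
import time


def measure_latency(fn, inputs, warmup=3):
    """Time fn on each input and return p50, p95, and max latency in milliseconds."""
    # Warm-up calls are excluded so one-time setup costs do not skew the numbers.
    for x in inputs[:warmup]:
        fn(x)
    samples = []
    for x in inputs:
        start = time.perf_counter()
        fn(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank 95th percentile.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "p50_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


if __name__ == "__main__":
    # Stand-in workload; replace with a call to the model under test.
    stats = measure_latency(lambda n: sum(range(n)), [100_000] * 50)
    print(stats)
```

Reporting tail latency alongside the median matters because real-time systems are usually constrained by their slowest responses, not their typical ones.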

Federated learning frameworks address privacy and data sovereignty concerns while still enabling collaborative model training across organizational boundaries: each participant trains on its own data and shares only model updates, which are then aggregated into a shared model. This approach is particularly relevant in regulated industries, where data-sharing restrictions limit traditional centralized training.
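The aggregation step at the heart of federated learning can be sketched as a weighted average of client model weights, in the style of federated averaging. The client data below is hypothetical, and the sketch leaves out local training, secure aggregation, and communication.

```python
def federated_average(client_updates):
    """Combine client weights into one model, weighted by local dataset size.

    client_updates: list of (weights, num_examples) pairs, where weights is a
    list of floats and every client uses the same weight layout.
    """
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("at least one client must contribute examples")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same weight layout")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


if __name__ == "__main__":
    # Two hypothetical sites; only weights and example counts leave each site.
    updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
    print(federated_average(updates))
```

Raw records never leave the participating organizations in this scheme. Only the weight vectors and example counts are exchanged.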

Conclusion

The AI field’s evolution from scaling-focused to application-driven development represents a natural maturation process that parallels other technology sectors. The technical innovations emerging from this transition—efficient architectures, domain-specific optimizations, and integrated deployment frameworks—will likely define the next phase of AI advancement. Success will increasingly depend on engineering excellence in deployment and integration rather than raw computational power, marking a fundamental shift in how we approach AI system design and implementation.